How We Built a Pittsburgh Tech-focused MCP Server

A local hackathon story from AI @ Work.

Flashback: It’s the beginning of 2025, and you’re just getting back into it after the holidays. Slowly but surely, you fall back into your routines, start reading your newsletters again, and pay attention to what people are talking about on LinkedIn (well, some of it at least). And before long, you’ve heard people mention “MCP” one too many times: “Model Context Protocol.”

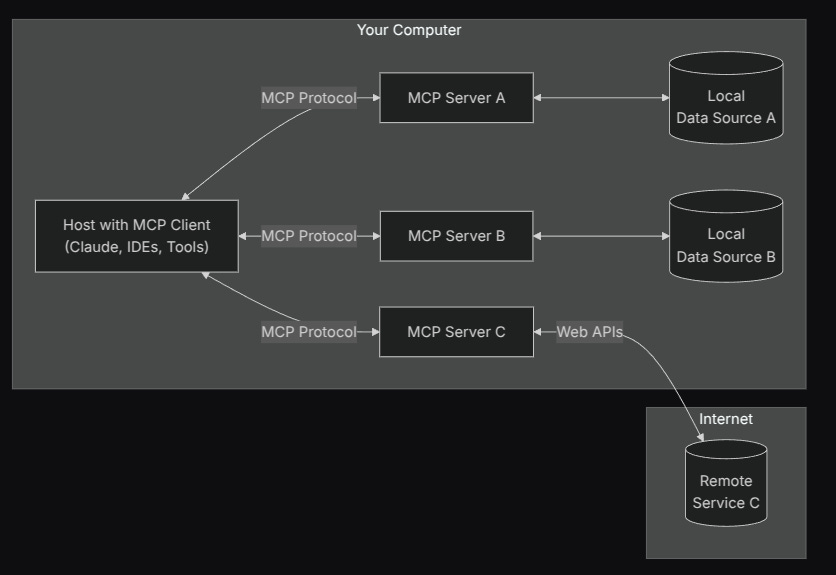

You do some research and find plenty of insightful articles about how MCP is going to be the future standard for how AI agents interact with tools, with no need to custom-build and maintain tools for your agent. Soon, everything will just have an MCP server that you can connect your agent to, and it will “just work.” Sounds great! But how does something that “just works” actually work?

If you’re like the folks at AI @ Work, a Pittsburgh-local collective of AI builders, you can only talk about something for so long before you go and do something about it. So that’s what we did: as MCP hype peaked in late March, we set out to run a mini hackathon to learn what was really involved in building and using an MCP server. But we also wanted to build something useful to the broader community, something that, if we built it well, could become part of how you operate day to day.

Building an MCP Server for Pittsburgh Tech/Startup Events

We settled on a simple problem that can quickly become frustrating: helping people navigate all the different tech and startup events happening around Pittsburgh. We felt this topic was doable and would still be valuable to many people in this community. Our approach was to build an MCP wrapper around a database containing as many Pittsburgh tech-related events as we could find. This could then be plugged into an agent, letting you quickly learn what events you could attend and even add your own.

Finding the Events and Populating the Database

We didn’t need or want to start from scratch here. There are many great resources out there that already list events. Our goal was to build something that consolidates them, lets an agent interact with them, and brings more awareness to those existing resources. Speaking of which, shout-outs to these great publications that we used in building this:

Charles Mansfield's calendar at InnovationPGH

This blog!

We wanted to keep our events up to date, so we used two low-code tools: Skyvern to scrape websites, and Make to orchestrate triggers and populate our database. The scraped event info was then transformed into a standardized format in Airtable for the MCP to use.
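To make “standardized format” concrete, here is a minimal sketch of the kind of normalization step that could sit between the scraper and the events table. The field names (`title`, `date`, `location`, `price`, `url`, `free`) and the `normalizeEvent` helper are hypothetical, not our actual Make scenario or Airtable schema; the sketch only accepts ISO dates and drops rows it cannot use.

```typescript
// Hypothetical shape of one scraped event; real Skyvern/Make output will differ.
interface RawScrapedEvent {
  title?: string;
  date?: string; // this sketch only accepts ISO dates such as "2025-06-05"
  location?: string;
  price?: string; // e.g. "Free", "$0", "$10"
  url?: string;
}

// Hypothetical standardized row stored in the events table.
interface EventRow {
  title: string;
  date: string; // YYYY-MM-DD
  location: string;
  free: boolean;
  url: string;
}

// Returns true only for real calendar dates written as YYYY-MM-DD.
function isIsoDate(value: string): boolean {
  if (!/^\d{4}-\d{2}-\d{2}$/.test(value)) return false;
  const parsed = new Date(`${value}T00:00:00Z`);
  // Round-tripping through toISOString rejects impossible dates like 2025-02-30.
  return !Number.isNaN(parsed.getTime()) && parsed.toISOString().slice(0, 10) === value;
}

// Converts one scraped record into a standardized row, or null if it is unusable.
function normalizeEvent(raw: RawScrapedEvent): EventRow | null {
  const title = raw.title?.trim();
  const date = raw.date?.trim();
  // Drop rows missing the fields the search tool depends on.
  if (!title || !date || !isIsoDate(date)) return null;
  const price = (raw.price ?? "").trim().toLowerCase();
  return {
    title,
    date,
    location: raw.location?.trim() || "TBD", // placeholder when the source omits it
    free: price === "free" || /^\$0(\.00)?$/.test(price),
    url: raw.url?.trim() ?? "",
  };
}
```

Rejecting rows early keeps bad scrapes out of the table, so the MCP tools never have to guess at malformed dates.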

Wrapping an MCP Server Around the Events Database

Simply aggregating the events wasn’t enough for us. We wanted to test MCP’s ability to make this kind of data universally accessible to an agent, so you could do whatever you wanted with it. Over a few evenings, we spun up a small but fully functional “events MCP” that lets any agent search and create Pittsburgh tech events in our Airtable database.

What We Actually Built

MCP Server

We forked Cloudflare’s remote-mcp-server template (TypeScript + Hono) and deployed it to Cloudflare Workers with Wrangler. Cloudflare was the cheapest host we found that natively supports Server-Sent Events (SSE), which MCP’s remote transport relied on to stream messages back to clients. (At least it did at the time: the 2025-03-26 revision of the MCP specification replaced the HTTP+SSE transport with Streamable HTTP.)
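For anyone wondering what “supports SSE” means on the wire: an SSE stream is plain text, where each event is a set of `field: value` lines ended by a blank line. In the older HTTP+SSE MCP transport, the server first sends an `endpoint` event telling the client where to POST its JSON-RPC requests, then delivers responses as `message` events. The sketch below formats such frames independently of Cloudflare or Hono; the `/sse/message?sessionId=abc123` path is a made-up example, not our deployed URL.

```typescript
// Formats one Server-Sent Events frame: an "event:" line, one "data:" line per
// line of payload, and a blank line that terminates the event.
function sseFrame(event: string, data: string): string {
  const dataLines = data
    .split("\n")
    .map((line) => `data: ${line}`)
    .join("\n");
  return `event: ${event}\n${dataLines}\n\n`;
}

// First frame: tells the client where to POST requests (hypothetical path).
const endpointFrame = sseFrame("endpoint", "/sse/message?sessionId=abc123");

// Later frames: JSON-RPC responses, serialized onto a single data line.
const messageFrame = sseFrame(
  "message",
  JSON.stringify({ jsonrpc: "2.0", id: 1, result: { tools: [] } }),
);

console.log(endpointFrame + messageFrame);
```

Because the stream is just long-lived text over HTTP, the hosting requirement is mostly about the platform not buffering or timing out the response.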

Tools (API endpoints)

Inside the Worker, we exposed two thin, purpose-built tools:

search_events(start_date, end_date, location, free) → returns matching rows from Airtable

create_event({...}) → inserts a new row

We started with Composio’s generic get-airtable-records helpers, but switching to these narrower tools gave the LLM fewer chances to get the schema or permissions wrong.
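Here is a minimal sketch of the filtering contract behind `search_events(start_date, end_date, location, free)`, run over rows that have already been fetched. The `EventRow` shape and the choice to make every argument optional are assumptions for illustration; our Worker actually queries Airtable, which this sketch does not do.

```typescript
// Hypothetical row shape; dates are ISO YYYY-MM-DD strings.
interface EventRow {
  title: string;
  date: string;
  location: string;
  free: boolean;
}

// Hypothetical argument shape mirroring search_events(start_date, end_date, location, free).
interface SearchArgs {
  start_date?: string;
  end_date?: string;
  location?: string;
  free?: boolean;
}

function searchEvents(rows: EventRow[], args: SearchArgs): EventRow[] {
  return rows
    .filter((row) => {
      // ISO YYYY-MM-DD strings sort chronologically, so string comparison works.
      if (args.start_date && row.date < args.start_date) return false;
      if (args.end_date && row.date > args.end_date) return false;
      // Case-insensitive substring match on location.
      if (args.location && !row.location.toLowerCase().includes(args.location.toLowerCase())) {
        return false;
      }
      if (args.free !== undefined && row.free !== args.free) return false;
      return true;
    })
    .sort((a, b) => a.date.localeCompare(b.date)); // soonest first
}
```

A fixed, small argument list like this is the point of the narrower tools: the model only has to fill four well-named fields instead of composing a generic records query.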

Local Dev & Testing

Claude Desktop talks STDIO, so during development we just ran the MCP server locally with “npm run dev”; for remote demos, we pointed Claude at our Cloudflare endpoint via npx mcp-remote https://…/sse, which bridges Claude’s STDIO connection to the remote server. A couple of us also experimented with a Python prototype and the community mcp-gateway, but the TypeScript path had the least friction.

Key Takeaways

MCP works, but the tooling is young. We hit wrinkles with Claude ignoring an MCP server that wasn’t already running when Claude started, Cloudflare environment-variable quirks, and Durable Object quotas.

LLMs are surprisingly good at wiring prompts to tool args. We never had to add a separate “date calculator” tool; Claude inferred ISO dates from “next two weeks” just fine.

Granular, task-specific tools beat raw CRUD. Limiting the surface area (just “search” and “create”) avoided accidental writes and kept the agent’s reasoning predictable.

SSE hosting matters. Railway + Docker worked but cost more; Cloudflare’s $5/month Workers plan gave us a public URL with streaming out of the box.
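The “granular, task-specific tools beat raw CRUD” takeaway is easiest to see in the write path. Below is a minimal sketch of how a `create_event` handler could whitelist fields before inserting anything, so the agent can add a row but cannot, say, pass a record ID and overwrite an existing one. The field list and the `validateCreateEvent` name are hypothetical, not our deployed code.

```typescript
// Hypothetical set of fields create_event accepts; anything else is rejected.
const ALLOWED_FIELDS = ["title", "date", "location", "free", "url"];

interface NewEvent {
  title: string;
  date: string; // YYYY-MM-DD
  location: string;
  free: boolean;
  url: string;
}

type CreateResult = { ok: true; event: NewEvent } | { ok: false; error: string };

function validateCreateEvent(input: Record<string, unknown>): CreateResult {
  // Reject unknown keys outright instead of silently passing them through.
  const unknownKeys = Object.keys(input).filter((k) => !ALLOWED_FIELDS.includes(k));
  if (unknownKeys.length > 0) {
    return { ok: false, error: `Unknown fields: ${unknownKeys.join(", ")}` };
  }
  const { title, date, location, free, url } = input;
  if (typeof title !== "string" || title.trim() === "") {
    return { ok: false, error: "title is required" };
  }
  if (typeof date !== "string" || !/^\d{4}-\d{2}-\d{2}$/.test(date)) {
    return { ok: false, error: "date must be YYYY-MM-DD" };
  }
  return {
    ok: true,
    event: {
      title: title.trim(),
      date,
      location: typeof location === "string" ? location.trim() : "",
      free: free === true, // anything other than an explicit true counts as not free
      url: typeof url === "string" ? url : "",
    },
  };
}
```

Returning a readable error string (rather than throwing) gives the model something it can act on and retry with.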

End Result

Anyone can drop the MCP URL into Claude or another agent builder, ask “Show me free AI meetups next month” or “Add a Women in Tech happy hour on June 5,” and the agent should handle the rest: no API keys, no manual Airtable wrangling required. (By the way, you bet I just copy/pasted our entire hackathon dev Slack conversation into ChatGPT to help write this technical section.)

Putting It into Action

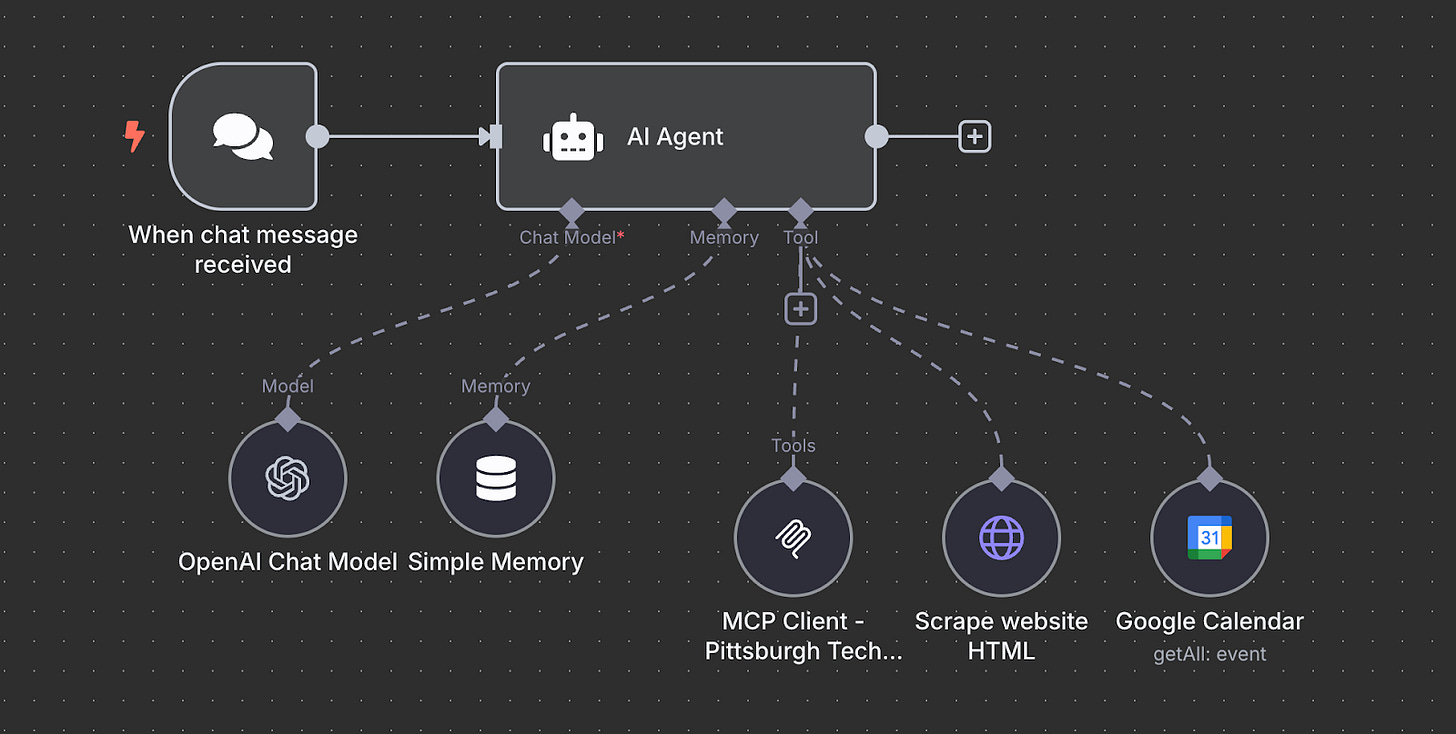

With the MCP now live, we wanted to show some examples of how it could be used, so we made a simple n8n agent with access not just to this new MCP, but also to a personal calendar and a web scraper tool. Here’s where agents get fun. With the right data and context, you can offload the manual task of “finding the right things to attend” to AI.

We used a simple chat UI right in n8n to interact with it. It’s powered by OpenAI’s GPT-4.1 model and has memory, so you can chat back and forth with it. But even a simple agent like this shows the potential of a well-designed MCP:

Luckily, taking out the garbage isn’t a showstopper (usually), so we can go to PyCon! And this is just scratching the surface. You can set up an automation that compares your calendar with the MCP each week and summarizes the events you can attend, filtered down to a specific topic. Ask the agent to plan your events by location, and it can surface only events within 10 miles of where you’ll be each day. So go ahead, give it your current location and ask whether anything’s happening nearby that you should go to! The world (burgh) is your oyster (pierogi)!
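If you wanted the weekly “what can I actually attend?” automation to be deterministic rather than left entirely to the agent, the core check is simple interval overlap. This is a minimal sketch under assumed shapes: `TimeRange`, `CandidateEvent`, and `attendableEvents` are hypothetical names, and the topic filter is a plain case-insensitive substring match on the title.

```typescript
interface TimeRange {
  start: Date;
  end: Date;
}

interface CandidateEvent extends TimeRange {
  title: string;
}

// Half-open intervals [start, end): an event starting exactly when a busy block ends is fine.
function overlaps(a: TimeRange, b: TimeRange): boolean {
  return a.start.getTime() < b.end.getTime() && b.start.getTime() < a.end.getTime();
}

// Keeps events that clash with nothing on the calendar and, optionally, match a topic keyword.
function attendableEvents(
  events: CandidateEvent[],
  busy: TimeRange[],
  topic?: string,
): CandidateEvent[] {
  const needle = topic?.toLowerCase();
  return events.filter(
    (e) =>
      !busy.some((b) => overlaps(e, b)) &&
      (needle === undefined || e.title.toLowerCase().includes(needle)),
  );
}
```

Usage: pass this week’s events from `search_events` and your calendar’s busy blocks, then hand the short list to the agent to summarize.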

But that’s not all. Since part of what makes MCP powerful is bundling multiple tools behind one server, we can also use this same MCP to add events to the PGH Tech calendar so anyone can benefit. Here, I simply asked it to look at Charles’ calendar and add anything that wasn’t already there. The agent used all the necessary tools within the MCP to do it perfectly.
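“Add anything that wasn’t there” boils down to a search-then-diff step before any `create_event` calls. Here is a minimal sketch of that diff, assuming events are matched on date plus a normalized title; the `eventKey` and `missingEvents` helpers are hypothetical, and real matching may need fuzzier rules than this.

```typescript
interface EventKeyFields {
  title: string;
  date: string; // YYYY-MM-DD
}

// Lowercases and collapses punctuation/whitespace so "PGH AI Meetup!" matches "pgh ai meetup".
function eventKey(e: EventKeyFields): string {
  const normalizedTitle = e.title.toLowerCase().replace(/[^a-z0-9]+/g, " ").trim();
  return `${e.date}|${normalizedTitle}`;
}

// Returns source events not already present in `existing`, also de-duplicating within `source`.
function missingEvents<T extends EventKeyFields>(source: T[], existing: EventKeyFields[]): T[] {
  const seen = new Set(existing.map(eventKey));
  const result: T[] = [];
  for (const e of source) {
    const key = eventKey(e);
    if (!seen.has(key)) {
      seen.add(key);
      result.push(e);
    }
  }
  return result;
}
```

Only the events this returns would be passed to `create_event`, which keeps repeated runs from piling up duplicates.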

How Does MCP Hold Up? And Should You Dive Deeper into It?

So what did we learn about MCP? Well, it’s got promise. True standards are powerful, but it’s difficult for anything to actually become the standard. MCP has potential and momentum. We showed that an MCP server can be built and can work, and what the benefits are once it’s all stood up.

But we did hit a few snags getting it up and running, and in the most desperate hackathon hours, we sometimes wondered whether an MCP was really any better than a simple tool built around an API. This MCP already bundles multiple tools, and if/when we add more concepts to it (like a job opening aggregator), having one place where all these concepts live as tools becomes significantly more valuable. You only need to add it to your agent once, and it picks up new features automatically. An MCP server should also be able to tell your agent how to use it and keep that description current, so you avoid manually defining endpoints and parameters in an API tool and then having to remember to keep them updated.
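The “tells your agent how to use it” part is concrete in the protocol: a client sends a `tools/list` request and the server answers with each tool’s name, description, and JSON Schema for its arguments; the client then invokes a tool with `tools/call`. The hand-rolled dispatcher below sketches that exchange. It is not the official MCP TypeScript SDK or Cloudflare’s template, the schemas are illustrative, and the handlers are stubs that only echo their input.

```typescript
type Json = Record<string, unknown>;

interface ToolDef {
  name: string;
  description: string;
  inputSchema: Json; // JSON Schema describing the tool's arguments
  handler: (args: Json) => string; // stub: returns text for the agent
}

const tools: ToolDef[] = [
  {
    name: "search_events",
    description: "Search Pittsburgh tech events by date range, location, and price.",
    inputSchema: {
      type: "object",
      properties: {
        start_date: { type: "string", description: "YYYY-MM-DD" },
        end_date: { type: "string", description: "YYYY-MM-DD" },
        location: { type: "string" },
        free: { type: "boolean" },
      },
    },
    handler: (args) => `searching with ${JSON.stringify(args)}`,
  },
  {
    name: "create_event",
    description: "Add a new Pittsburgh tech event.",
    inputSchema: {
      type: "object",
      properties: { title: { type: "string" }, date: { type: "string" } },
      required: ["title", "date"],
    },
    handler: (args) => `would create ${String(args.title)}`,
  },
];

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: Json;
}

function handle(req: JsonRpcRequest): Json {
  if (req.method === "tools/list") {
    // Only the self-description goes over the wire, never the handler.
    const listed = tools.map(({ name, description, inputSchema }) => ({ name, description, inputSchema }));
    return { jsonrpc: "2.0", id: req.id, result: { tools: listed } };
  }
  if (req.method === "tools/call") {
    const name = req.params?.name;
    const tool = tools.find((t) => t.name === name);
    if (!tool) {
      return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: `Unknown tool: ${String(name)}` } };
    }
    const args = (req.params?.arguments ?? {}) as Json;
    return { jsonrpc: "2.0", id: req.id, result: { content: [{ type: "text", text: tool.handler(args) }] } };
  }
  // Standard JSON-RPC error for methods this sketch does not implement.
  return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
}
```

Adding a third tool (say, a job-openings search) means appending one entry to `tools`; every connected agent sees it on its next `tools/list` without any client-side changes.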

Bottom line: Developing a better understanding of MCP is absolutely worth it. Even if it doesn’t take off as the standard, something like it will. And the concepts that make it potentially valuable can only make your own AI and agents better as well.

Want to give the Pittsburgh Tech MCP a try? Email team@aiatworkpgh.com for beta access. And don’t hesitate to reach out to the AI @ Work group with questions or other ideas. For more information, visit http://www.aiatworkpgh.com/.

Lastly, a huge thank you and shout out to all who were involved in this project: April Lutheran, Austin Orth, Benjamin Kostenbader, Dan Gorrepati, Handerson Gomes, Ian Cook, Luisa Rueda, Matt Nicosia, Michelle Powell, and Rex Harris.