Dissecting Agentic Site Reliability Engineering - Why the Hype Misses the Mark

A local expert's perspective on a current AI trend.

The Hype Cycle

Agentic Site Reliability Engineering (SRE) is the latest craze proliferating through Silicon Valley's AI technosphere. The Valley's deepest venture capital pockets are ponying up hundreds of millions to chase this new AI agent use case.

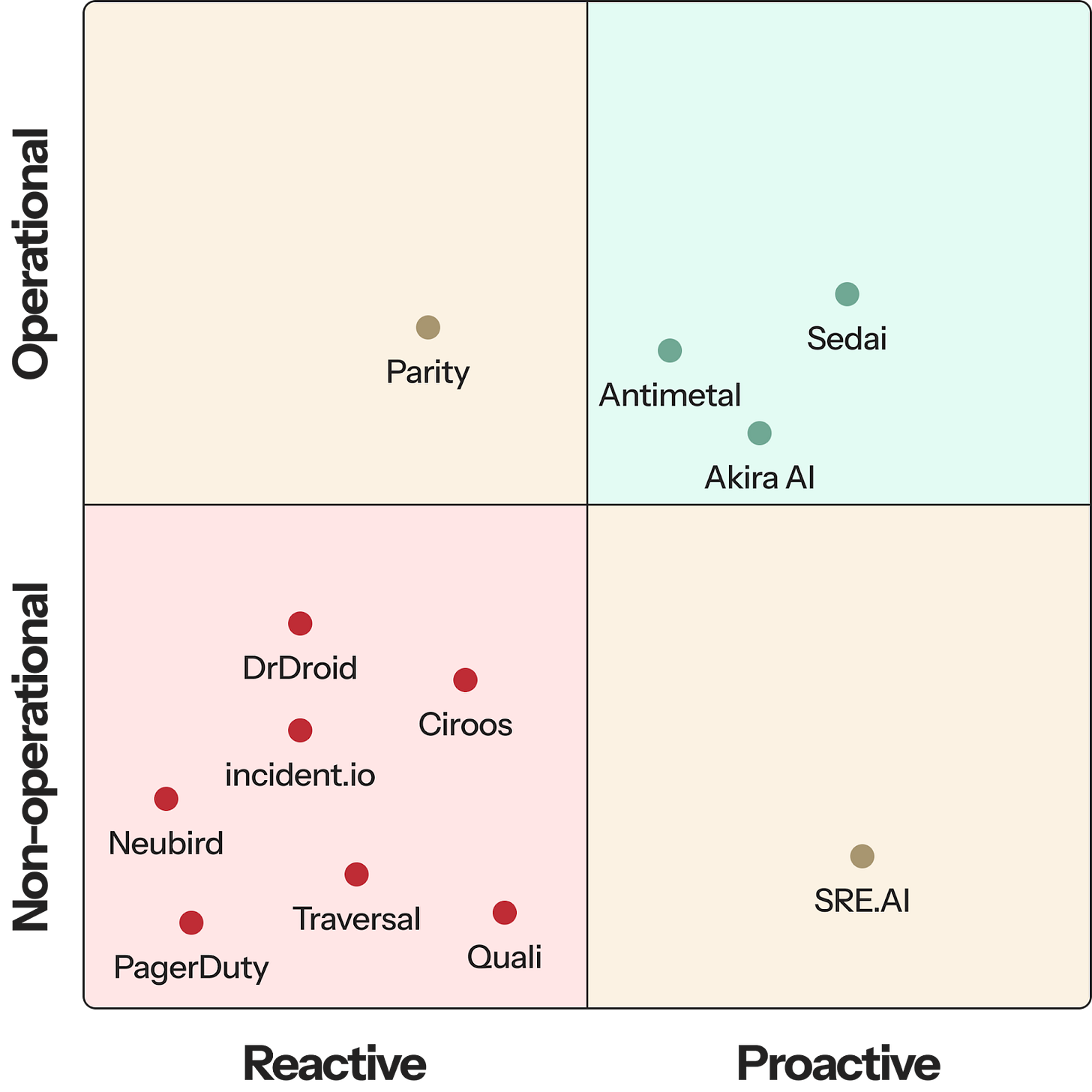

Both Traversal AI and Ciroos AI recently emerged from stealth to throw their hats into the Agentic SRE ring, having raised $48M and $21M Series A rounds, respectively. They join the ranks of about ten other startups, such as Antimetal, Parity, and Neubird, as well as tech blue chips like Datadog and Microsoft.

But what is Agentic SRE, and why has it emerged as one of the hottest AI investments in an already bullish market? If you ask these startups, you won't get a straight answer.

What Do SREs Actually Do?

Site Reliability Engineers (SREs) are the engineers responsible for software uptime. The application in question doesn't matter; whether a mobile app, a web backend, or an IoT fleet, it's the SREs who make sure production is humming along.

They "keep the lights on" for the business, so to speak, dealing with production releases, observability, application instability, and incident triage and response. They also build tools to ensure the lights never go off in the first place.

The term originated with Google in the early 2000s but was popularized in 2016 with the release of the Site Reliability Engineering O'Reilly book. The book positions SRE as an evolution of the DevOps role—where DevOps engineers might be relegated to keeping the lights on and nothing more, SREs are empowered to advance the platform, proactively improving application stability and observability. “Proactive” in this sense is a natural follow-on to the broader “shift-left” mentality in the industry, where responsibility for quality and reliability is shifted earlier in the development cycle.

Ultimately, I submit that the SRE role can be broken down into two broad job functions:

Reactive work: responding to alerts, mitigating incidents, conducting postmortems, and documenting the results in playbooks or runbooks.

Proactive work: improving observability tooling, orchestrating canary deployments, advancing application stability, and capacity planning.

Where Agentic SREs Fall Short

With this in mind, you might be tempted to think that Agentic SREs (AI agents meant to fulfill the role of an SRE) also have a dual mandate: keep the application running and prevent future incidents. At the very least, you'd expect the AI agent to autonomously scan logs, match them to runbooks, and mitigate an outage before a human operator ever gets paged.

In reality, most of these tools do little more than write your Elasticsearch queries for you. Traversal, for example, explicitly advertises that its agent has only read-only access to your production metrics and logs. Similarly, Neubird’s flagship product Hawkeye diagnoses outage root causes but stops short of taking action. Bits AI SRE by Datadog and Incident.io's AI SRE do the same.

Curiously, these agentic SREs focus exclusively on the reactive SRE job function (i.e., incident response). Even there, nearly all of them are non-operational: they diagnose the incident but don't perform the tasks required to resolve it.

An engineer shelling out $415/month for PagerDuty's AI SRE can expect to respond to the same number of pages as before. The only difference: they'll now have a summary of the incident and suggestions for remediation waiting when they log on. That's certainly not nothing, but it's a far cry from replacing the human SRE in the loop.

Three Limitations of Today’s AI SREs

So why is it that the vast majority of AI SREs are neither operational nor proactive? There are three primary reasons.

1. RAG.

The first reason is historical: AI SREs sprang up because of the sudden accessibility of retrieval-augmented generation (RAG). With RAG, the agent provider can ingest your playbooks and log data, convert them into vector embeddings, and store them in a vector database.

When an alarm sounds, an LLM can generate queries to fetch the most relevant vectorized logs and metric data. You can then prompt it to analyze the results of those queries and hypothesize one or more root causes. The retrieval step acts as a coarse filter that narrows the observability data down to a relevant slice, and the LLM acts as a finer filter that strips out the remaining noise.

Because these systems depend on that specific technological advancement, they're limited by what RAG needs to function: existing data to retrieve. Without observability data from an incident already underway, the AI has nothing to reason over, so it can't anticipate where the next incident will come from or proactively suggest improvements. This is why existing AI SREs are purely reactive.
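The dependence on existing data is easiest to see in code. Below is a minimal sketch of the retrieval half of such a pipeline, assuming a toy bag-of-words vector in place of a real embedding model and an in-memory list in place of a vector database. The function names and sample log lines are hypothetical, and the LLM call itself is left out: the sketch stops at the prompt the model would receive.

```python
import math
from collections import Counter

# Toy "embedding": a bag-of-words term-count vector. A real system would call
# an embedding model; this stand-in keeps the sketch self-contained.
def embed(text: str) -> Counter:
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(alert: str, documents: list[str], k: int = 2) -> list[str]:
    """Coarse filter: return the k stored documents most similar to the alert."""
    query = embed(alert)
    ranked = sorted(documents, key=lambda d: cosine(query, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(alert: str, context: list[str]) -> str:
    """Input to the fine filter: the LLM only sees the retrieved slice of data."""
    lines = "\n".join(f"- {c}" for c in context)
    return (f"Alert: {alert}\nRelevant logs and runbook excerpts:\n{lines}\n"
            "Hypothesize the most likely root causes.")

# Hypothetical ingested logs and runbook text.
docs = [
    "checkout-service ERROR connection pool exhausted for payments-db",
    "runbook: payments-db connection pool exhausted -> check long-running queries",
    "frontend INFO cache warmed in 120ms",
    "auth-service WARN token refresh latency above 500ms",
]
top = retrieve("payments-db connection pool exhausted", docs)
print(build_prompt("payments-db connection pool exhausted", top))
```

Note that `retrieve` can only rank what is already in `docs`: if no log or runbook resembles the alert, the prompt carries nothing useful, and there is no step at which the system could look ahead to a failure that hasn't produced data yet.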

2. They're fighting yesterday's war.

When an AI encounters an incident type for the first time, it's going in blind, with little chance of picking the root cause out of a practically unbounded set of possibilities.

Seasoned SREs know a large part of the job is keeping the context of the entire weave of microservices—including the history of its development and interaction—in their heads while resolving an incident. The job requires a lot of tribal knowledge, which is why they document that knowledge in playbooks and postmortems.

An agent can ingest human-authored playbooks to equip itself to diagnose known incident types, but it's the unknown and unexpected incidents that require tacit knowledge to resolve. When a code change creates a new type of incident, only the human has the necessary context to filter the signal from the noise.

As a result, AI SREs will only be capable of resolving known, well-understood incident types, and they would be ineffective at taking operational action to resolve new ones. To avoid acting on false positives, current AI SREs defer to humans in all cases rather than risk overreaching.
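A minimal sketch of why this happens, assuming a hypothetical runbook index keyed by incident signature and a simple token-overlap (Jaccard) similarity standing in for a real retriever: an alert that resembles a documented incident gets a runbook suggestion, while a novel one has nothing to match and falls through to a human. The 0.5 threshold is an arbitrary assumption.

```python
# Hypothetical runbook index: incident signature -> runbook title.
RUNBOOKS = {
    "disk usage above 90 percent on db host": "Runbook: expand volume or prune old WAL files",
    "pod crashloop after config map change": "Runbook: revert the ConfigMap and restart the deployment",
}

def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity in [0, 1]."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0

def triage(alert: str, threshold: float = 0.5) -> str:
    """Suggest a runbook for a known incident type; otherwise escalate to a human."""
    signature, runbook = max(RUNBOOKS.items(), key=lambda kv: jaccard(alert, kv[0]))
    if jaccard(alert, signature) >= threshold:
        return f"suggest: {runbook}"
    return "escalate: no matching runbook, page the on-call SRE"

print(triage("disk usage above 90 percent on db host"))           # known incident type
print(triage("p99 latency spike in checkout after deploy 4f2a"))  # novel incident
```

Even in the "known" branch the sketch only suggests a runbook rather than executing it, which mirrors the read-only posture of current products.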

3. Operational agents are dangerous.

Finally, a reactive, operational agent is simply a terrible idea.

Picture this: it's 5:00 AM and your pager goes off. There's an outage in production. You roll out of bed and consider putting on a pot of coffee. Looking at your phone, you see the agent has been thrashing against this incident for the better part of an hour.

By the time you log on, the AI has restarted pods, tainted and replaced cluster nodes, rolled SSL certs, and flushed the logs. Any chance you had to determine the root cause has long since passed.

An operational agent with the keys to production that independently resolves issues with 95% accuracy will get it wrong the other 5% of the time. And when a wrong action lands in production, the fallout can be catastrophic.

It's like watching a Roomba glide over the chalk outline at a crime scene: you lose all ability to detect what caused the issue, and you'll be trying to fix one problem while the agent is causing three more. The last thing any SRE wants is to have an AI running amok in production.
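To put a rough number on how that 5% compounds, assume, purely for illustration, that each action the agent takes is independently correct with probability 0.95. The chance that a run of n actions contains at least one bad one is then:

```latex
P(\text{at least one bad action in } n) = 1 - 0.95^{n},
\qquad
1 - 0.95^{10} \approx 0.40,
\qquad
1 - 0.95^{14} \approx 0.51
```

At ten actions, the chance of at least one mistake is already about 40%, and by the fourteenth it passes 50%. Real remediation steps are neither independent nor equally risky, so treat this as an intuition rather than a risk model.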

The Better Path Forward

This is why agentic SREs are missing the mark. They're focusing on the wrong job functions of the SRE.

Instead, these AI agents should focus on proactive, operational SRE tasks: monitoring canary deployments and rolling back before an incident is declared, improving distributed trace capture with richer metadata, or fine-tuning alert thresholds to reduce false positives and false negatives. After all, an ounce of prevention is worth a pound of cure.
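As one illustration of that kind of proactive work, here is a minimal sketch of alert-threshold tuning. It assumes the agent has a hypothetical history of p99 latency readings, each labeled by whether it coincided with a real incident, and simply picks the observed value that minimizes false positives plus false negatives. A real deployment would need far more history and care; the output is a recommendation a human could review.

```python
# Hypothetical history: (p99 latency in ms, whether that window was a real incident).
HISTORY = [
    (180, False), (210, False), (250, False), (300, False), (350, True),
    (380, False), (420, False), (450, True), (520, True), (610, True),
]

def errors_at(threshold: float, history) -> tuple[int, int]:
    """Count (false positives, false negatives) when alerting on value >= threshold."""
    fp = sum(1 for v, incident in history if v >= threshold and not incident)
    fn = sum(1 for v, incident in history if v < threshold and incident)
    return fp, fn

def tune_threshold(history) -> float:
    """Pick the candidate threshold (an observed value) with the fewest total errors."""
    candidates = sorted({v for v, _ in history})
    return min(candidates, key=lambda t: sum(errors_at(t, history)))

best = tune_threshold(HISTORY)
print(best, errors_at(best, HISTORY))  # 450 (0, 1)
```

Here no threshold separates the data perfectly, so the sketch trades one missed incident for zero noisy pages. Weighting false negatives more heavily would be a natural extension, since a missed incident usually costs more than a spurious page.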

Consider canary deployments as an example. If you run your AI agent on an open source model via GitHub Actions, it can monitor your canary deployment at roughly 1/180th the cost of having a DevOps engineer on log-watching duty (using Glassdoor average salary data as a point of reference).

Ideally, you want to give the agent tasks that are safe to execute independently. Rolling back a stateless deployment is one such task: if the agent mistakes a good deployment for a bad one and initiates a rollback, you simply return to what was already running in production. No harm done.
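A minimal sketch of such a guarded check, assuming the canary ships as a new revision of a Kubernetes Deployment so that `kubectl rollout undo` returns it to the previous revision. The deployment and namespace names, the 2x error-rate ratio, the 100-request minimum, and the 0.1% baseline floor are all hypothetical choices; canaries run as a separate Deployment or through a progressive-delivery controller would roll back differently. The sketch builds the command but does not run it.

```python
import shlex

def canary_is_unhealthy(baseline_errors: int, baseline_total: int,
                        canary_errors: int, canary_total: int,
                        max_ratio: float = 2.0, min_requests: int = 100) -> bool:
    """Flag the canary if its error rate exceeds max_ratio times the baseline's."""
    if canary_total < min_requests:
        return False  # not enough canary traffic yet to judge
    baseline_rate = baseline_errors / baseline_total if baseline_total else 0.0
    canary_rate = canary_errors / canary_total
    # Floor the baseline rate so a perfectly clean baseline doesn't make any error fatal.
    return canary_rate > max_ratio * max(baseline_rate, 0.001)

def rollback_command(deployment: str, namespace: str) -> list[str]:
    """Build (but do not run) the kubectl command that reverts to the previous revision."""
    return ["kubectl", "rollout", "undo", f"deployment/{deployment}", "-n", namespace]

# Hypothetical metrics: baseline at 0.12% errors, canary at 1.8%.
if canary_is_unhealthy(baseline_errors=12, baseline_total=10_000,
                       canary_errors=9, canary_total=500):
    print(shlex.join(rollback_command("checkout", "prod")))
```

The asymmetry is the point of the design: a false alarm costs one redeploy of code that was already running, while a missed regression is caught before it reaches all traffic.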

To Wrap It Up

All in all, the current cohort of AI SREs has a long way to go before it fulfills even half of the SRE's dual mandate. This round of products misses the mark, but not all hope is lost: there are plenty of good opportunities for AI SREs to proactively take over the more manual, operational labor of the SRE job.
